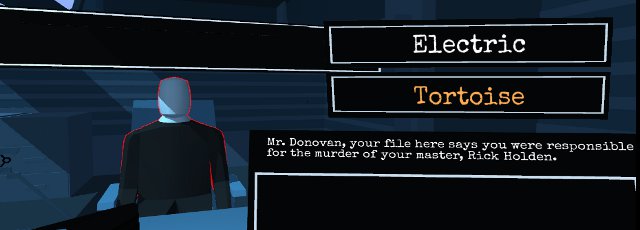

Asimov's First Law of Robotics states "A robot may not injure a human being or, through inaction, allow a human being to come to harm." And yet across the desk in front of you sits a robot convicted of murder. He's polite, succinct, and wholly upfront about his role in the crime. Now it's up to you to mete out justice as you see fit. So begins Electric Tortoise, a brief, moody art game by Dillon Rogers that wears its I, Robot and Blade Runner influences on its sleeve. Sci-fi fans will appreciate its reverence for the authors it imitates and the style and themes it evokes. It's just a shame the entire game is far shorter than most demos.

Gameplay is simple. You pick dialogue options on your screen, deciding whether to grill the robot before you or offer him a sympathetic ear as you analyze the story and decide his ultimate fate. Appreciate the game design while you interrogate him; your grey, bland, yet somehow gorgeously rendered bureaucrat's office is lifted straight out of Kafka's worst nightmare. The only downside? You can blast through multiple plays in a matter of minutes. There's no losing scenario and only a few eventual outcomes, but the minimalist storytelling and evocative Unity-powered art style give it more resonance than its meager runtime suggests. As it stands, Electric Tortoise suggests great things to come from Rogers and Co. Here's hoping there will be more from the team in the future.

Powerful, sketchy, and quite intriguing. If the game returned to the main screen without needing to be refreshed, it would get a 5/5. Till then, 4/5.

A proper ending instead of just fading to black would also be nice, as would having the game release your cursor instead of needing to press Escape.

Rick Holden is a concatenation of 2 Blade Runner characters.

The office is clearly the Leon/Holden scene, ditto the title.

That "Will I dream?" line: isn't that from HAL 9000?

I'm guessing that with the different responses there are many different outcomes. I chose NOT to shoot the robot.

What plug-in does it use? When I try to play, it says I don't have the right plug-in, but it doesn't tell me what I need.

Hey guys, developer here.

Really appreciate the write-up and kind words.

Nathaniel, I've updated the game so it returns to the beginning. Thank you for your feedback, and thank you for playing. =]

Ethan, you need the Unity web player plug-in. You can also download the game off gamejolt.com if the web player doesn't work for you.

A thing, though...

The Three Laws of Robotics (and later the Zeroth Law) are formulated on the premise that robots are designed only to obey these laws (i.e. they never have consciousness, emotions, etc.), so that they are absolutely obedient to humanity and hence beneficial to it.

Therefore, the situation in the game would never actually happen, simply because the robot isn't supposed to have the capability to "think" in the first place.

Another thing:

The first law always overrides the second law, so no matter what the robot is instructed to do, it cannot assist in a suicide.

Not quite, @argyblarg.

Look at The Caves of Steel and The Naked Sun. The key word is 'knowingly'. In the Asimov stories, robots are indeed accessories to murder by smuggling weapons (including one that detaches his arm to give to someone who uses it as a club), and a robot that looks like a human causes someone who is pathologically afraid of actual contact with others to be scared to death.

That being said, my take on things is that a non-malfunctioning robot should find the harm of death greater than the harm of suffering, and come down on the side of not assisting in the suicide.

Interesting. Am I right in saying that the name 'Electric Tortoise' is a nod to the Phil K. Dick short story 'The Electric Ant'?

And yes, HAL 9000 says 'Will I dream?' in the film version of 2010.

Mildly enjoyable.

Can I just say, I really dislike the new JIG system of the weird-shaped game window box with the review underneath. It's... not my cup of tea.

This work is interesting and enjoyable, but I find the basic premise to be a bit annoying.

Assuming an Asimov-style three laws robot, then what I've read of those stories seems to indicate that there should be only three possible outcomes to this scenario:

1) The robot's first law duty to prevent harm through inaction would overrule its second law duty to follow orders, and it would seek outside help for John.

2) The robot's first law duty to prevent harm through inaction would be interpreted as not yet coming into effect, and while the robot still could not be ordered to kill him, John could've killed himself by sending the robot on an errand while he committed suicide.

3) The robot's first and second law duties would match perfectly in importance of application, creating a hazard condition and causing the robot to cease functioning effectively, if at all.

If the robot believed that preventing injury through inaction (the robot must not allow John to continue suffering) outweighed preventing injury by direct action (the robot must not kill John), then it would also outweigh the second law entirely, allowing the robot to call in outside help over John's wishes rather than assist in his suicide.

I believe that the apparent dichotomy in the first law which is being exploited here does not actually exist if we follow Asimov's examples. It could be argued that the author isn't following Asimov that closely, but the allusions are very strong.

I enjoyed the story. On my second playthrough, though, I was disappointed to find that the player's options had next to no bearing on the story. The script appears to alter only a few words based on your last response and otherwise tells the exact same story. I feel this was a good base for something that could have had more layers to the story.

Update